Post-Training Techniques Make Smarter, Cheaper, and Deployable AI for the Enterprise

Dec 11, 2025

Next Generation Stage

Next Generation

Fine-tuning open-source LLMs is no longer experimental. It’s the fastest path to production-grade performance, lower costs, and domain-specific accuracy.

In this live session, we’ll show how leading teams go from raw data → fine-tuning → distillation → deployment using Nebius Token Factory’s Post-Training service, powered by Papyrax, the fastest multi-node JAX framework in its class.

See how enterprise partners build custom, high-speed, cost-efficient models ready for real-world use.

What you’ll learn

- When to prompt, fine-tune, or distill, and how to decide.

- Which open-source models (DeepSeek V3, GPT-OSS 120B, Qwen3 Coder 480B, Kimi) fine-tune best.

- How to prepare and clean production data for training.

- How to run efficient LoRA, QLoRA, and full fine-tuning on multi-node clusters.

- Distillation workflows that turn 100B-parameter models into fast, low-cost students.

- 1-click deployment with built-in evals, autoscaling, and zero-retention inference.

You’ll leave with practical templates, dataset examples, benchmark results, and deploy-ready code.

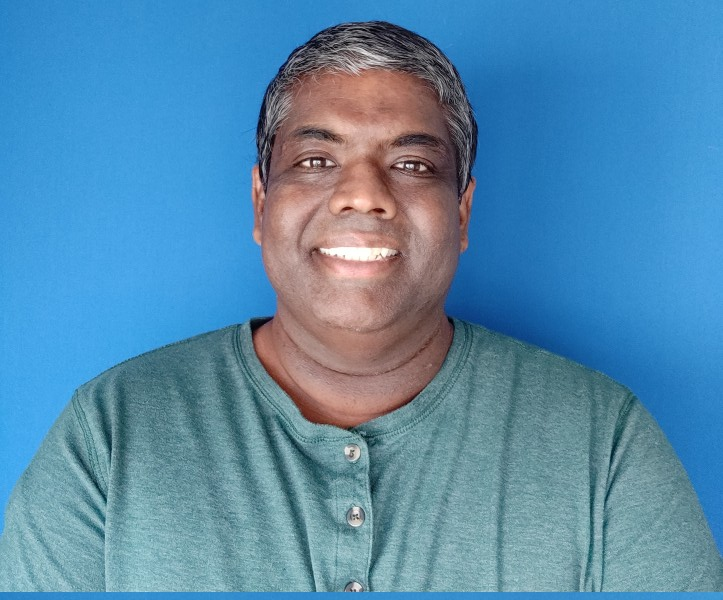

Speakers

Session Type

Presentation

Content Focus

Strategy
